CNN Architectures(LeNet to DenseNet)

An in depth introduction to different State of the Art Convoutional Neural Networks

- Introduction

- What is ImageNet

- LeNet-5(1998)

- AlexNet(2012)

- ZFNet(2013)

- VggNet(2014)

- Inception Network (GoogleNet)(2014)

- ResNet(2015)

- ResNet-Wide

- DenseNet(2017)

- MobileNet

- References

Introduction

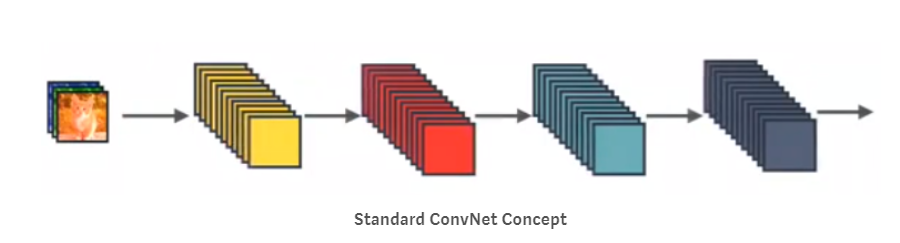

In this post,we will talk about some of the most important papers that have been published over the last 5 years and discuss why they’re so important.We will go through different CNN Architectures (LeNet to DenseNet) showcasing the advancements in general network architecture that made these architectures top the ILSVRC results.

What is ImageNet

ImageNet is formally a project aimed at (manually) labeling and categorizing images into almost 22,000 separate object categories for the purpose of computer vision research.

However, when we hear the term “ImageNet” in the context of deep learning and Convolutional Neural Networks, we are likely referring to the ImageNet Large Scale Visual Recognition Challenge, or ILSVRC for short.

The ImageNet project runs an annual software contest, the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), where software programs compete to correctly classify and detect objects and scenes.

The goal of this image classification challenge is to train a model that can correctly classify an input image into 1,000 separate object categories.

Models are trained on ~1.2 million training images with another 50,000 images for validation and 100,000 images for testing.

These 1,000 image categories represent object classes that we encounter in our day-to-day lives, such as species of dogs, cats, various household objects, vehicle types, and much more. You can find the full list of object categories in the ILSVRC challenge

When it comes to image classification, the ImageNet challenge is the de facto benchmark for computer vision classification algorithms — and the leaderboard for this challenge has been dominated by Convolutional Neural Networks and deep learning techniques since 2012.

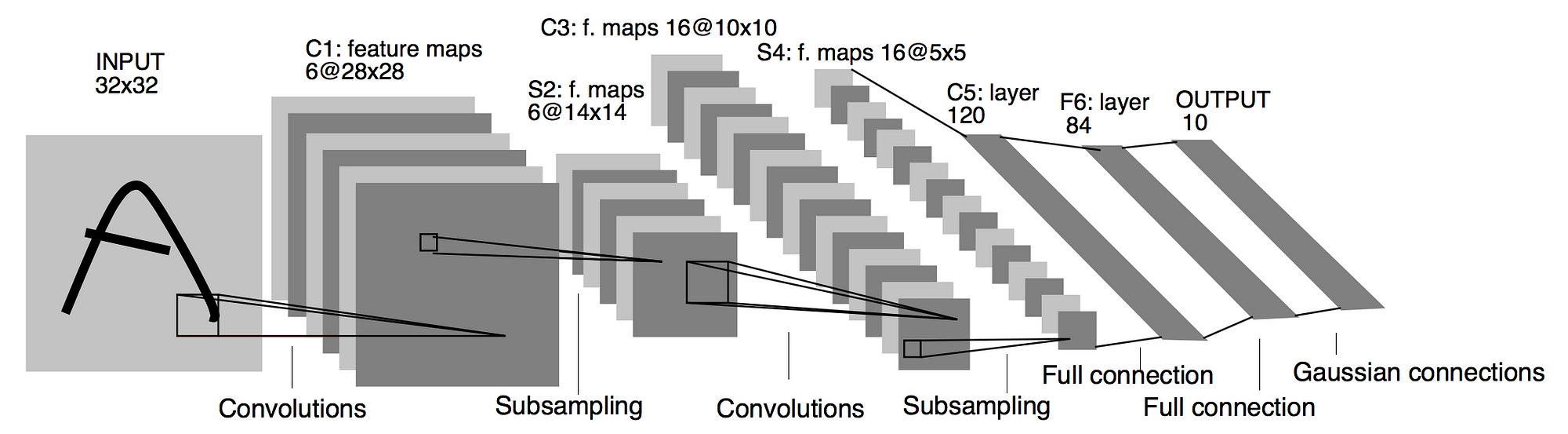

LeNet-5(1998)

Gradient Based Learning Applied to Document Recognition

- A pioneering 7-level convolutional network by LeCun that classifies digits,

- Found its application by several banks to recognise hand-written numbers on checks (cheques)

- These numbers were digitized in 32x32 pixel greyscale which acted as an input images.

- The ability to process higher resolution images requires larger and more convolutional layers, so this technique is constrained by the availability of computing resources.

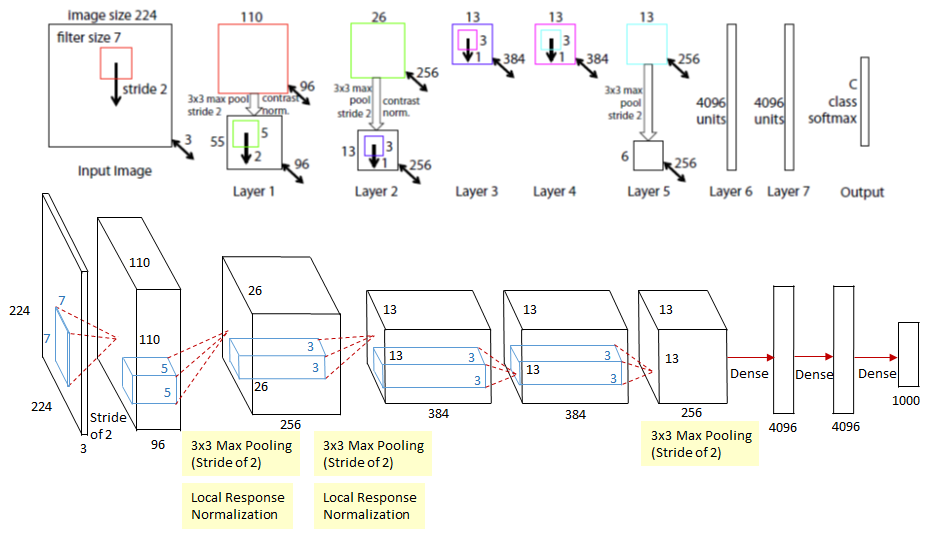

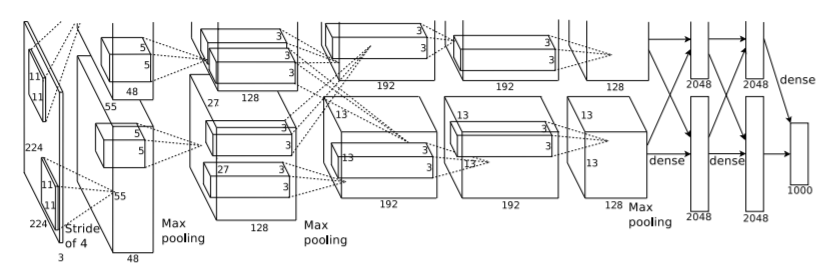

AlexNet(2012)

ImageNet Classification with Deep Convolutional Networks

- One of the most influential publications in the field by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton that started the revolution of CNN in Computer Vision.This was the first time a model performed so well on a historically difficult ImageNet dataset.

- The network consisted 11x11, 5x5,3x3, convolutions and made up of 5 conv layers, max-pooling layers, dropout layers, and 3 fully connected layers.

- Used ReLU for the nonlinearity functions (Found to decrease training time as ReLUs are several times faster than the conventional tanh function) and used SGD with momentum for training.

- Used data augmentation techniques that consisted of image translations, horizontal reflections, and patch extractions.

- Implemented dropout layers in order to combat the problem of overfitting to the training data.

- Trained the model using batch stochastic gradient descent, with specific values for momentum and weight decay.

- AlexNet was trained for 6 days simultaneously on two Nvidia Geforce GTX 580 GPUs which is the reason for why their network is split into two pipelines.

- AlexNet significantly outperformed all the prior competitors and won the challenge by reducing the top-5 error from 26% to 15.3%

ZFNet(2013)

Visualizing and Understanding Convolutional Neural Networks

This architecture was more of a fine tuning to the previous AlexNet structure by tweaking the hyper-parameters of AlexNet while maintaining the same structure but still developed some very keys ideas about improving performance.Few minor modifications done were the following:

- AlexNet trained on 15 million images, while ZF Net trained on only 1.3 million images.

- Instead of using 11x11 sized filters in the first layer (which is what AlexNet implemented), ZF Net used filters of size 7x7 and a decreased stride value. The reasoning behind this modification is that a smaller filter size in the first conv layer helps retain a lot of original pixel information in the input volume. A filtering of size 11x11 proved to be skipping a lot of relevant information, especially as this is the first conv layer.

- As the network grows, we also see a rise in the number of filters used.

- Used ReLUs for their activation functions, cross-entropy loss for the error function, and trained using batch stochastic gradient descent.

- Trained on a GTX 580 GPU for twelve days.

- Developed a visualization technique named Deconvolutional Network, which helps to examine different feature activations and their relation to the input space. Called deconvnet because it maps features to pixels (the opposite of what a convolutional layer does).

- It achieved a top-5 error rate of 14.8%

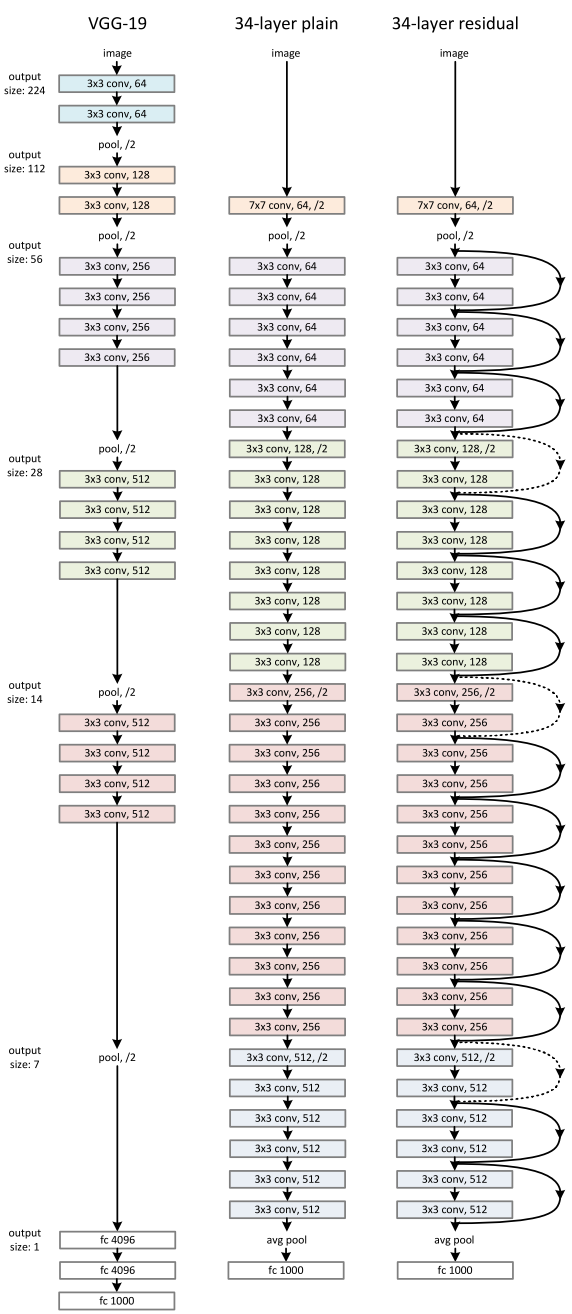

VggNet(2014)

VERY DEEP CONVOLUTIONAL NETWORKS FOR LARGE-SCALE IMAGE RECOGNITION

This architecture is well konwn for Simplicity and depth.. VGGNet is very appealing because of its very uniform architecture.They proposed 6 different variations of VggNet however 16 layer with all 3x3 convolution produced the best result.

Few things to note:

- The use of only 3x3 sized filters is quite different from AlexNet’s 11x11 filters in the first layer and ZF Net’s 7x7 filters. The authors’ reasoning is that the combination of two 3x3 conv layers has an effective receptive field of 5x5. This in turn simulates a larger filter while keeping the benefits of smaller filter sizes. One of the benefits is a decrease in the number of parameters. Also, with two conv layers, we’re able to use two ReLU layers instead of one.

- 3 conv layers back to back have an effective receptive field of 7x7.

- As the spatial size of the input volumes at each layer decrease (result of the conv and pool layers), the depth of the volumes increase due to the increased number of filters as you go down the network.

- Interesting to notice that the number of filters doubles after each maxpool layer. This reinforces the idea of shrinking spatial dimensions, but growing depth.

- Worked well on both image classification and localization tasks. The authors used a form of localization as regression (see page 10 of the paper for all details).

- Built model with the Caffe toolbox.

- Used scale jittering as one data augmentation technique during training.

- Used ReLU layers after each conv layer and trained with batch gradient descent.

- Trained on 4 Nvidia Titan Black GPUs for two to three weeks.

- It achieved a top-5 error rate of 7.3%

After Inception V1 ,the author proposed a number of upgrades which increased the accuracy and reduced the computational complexity.This lead to many new upgrades resulting in different versions of Inception Network :

- Inception v2

- Inception V3

Inception Network (GoogleNet)(2014)

Going Deeper with Convolutions

Prior to this, most popular CNNs just stacked convolution layers deeper and deeper, hoping to get better performance,however Inception Network was one of the first CNN architectures that really strayed from the general approach of simply stacking conv and pooling layers on top of each other in a sequential structure and came up with the Inception Module.The Inception network was complex. It used a lot of tricks to push performance; both in terms of speed and accuracy. Its constant evolution lead to the creation of several versions of the network. The popular versions are as follows:

- Inception v1.

- Inception v2 and Inception v3.

- Inception v4 and Inception-ResNet.

Each version is an iterative improvement over the previous one.Let us go ahead and explore them one by one

Inception V1

-

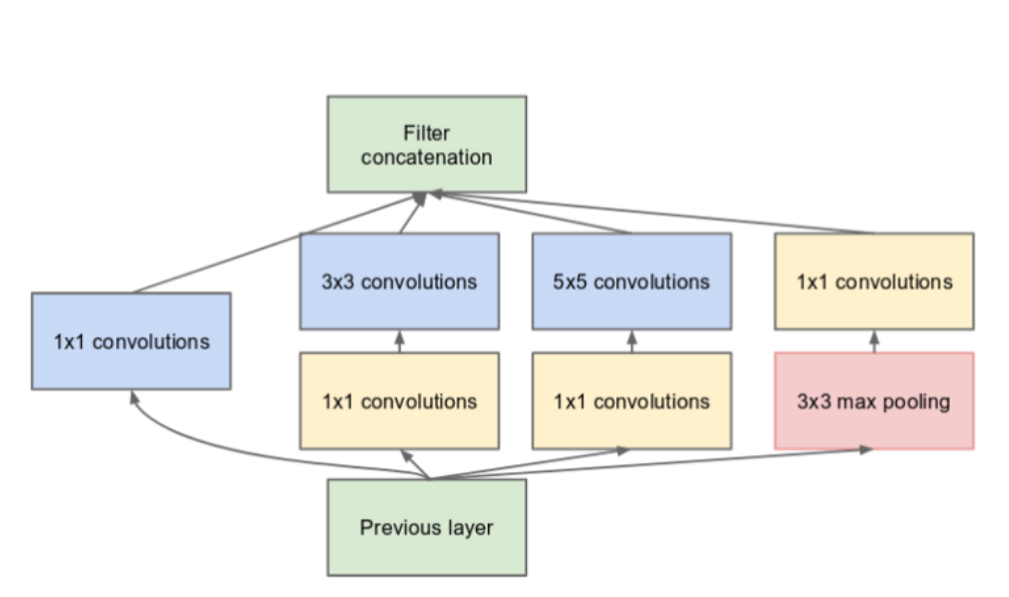

What is the right kernel size for convolution

A larger kernel is preferred for information that is distributed more globally, and a smaller kernel is preferred for information that is distributed more locally.

Ans- Filters with multiple sizes.The network essentially would get a bit “wider” rather than “deeper”

-

How to stack convolution which can be less computationally expensive

Stacking them naively computationally expensive.

Ans-Limit the number of input channels by adding an extra 1x1 convolution before the 3x3 and 5x5 convolutions

-

How to avoid overfitting in a very deep network

Very deep networks are prone to overfitting. It also hard to pass gradient updates through the entire network.

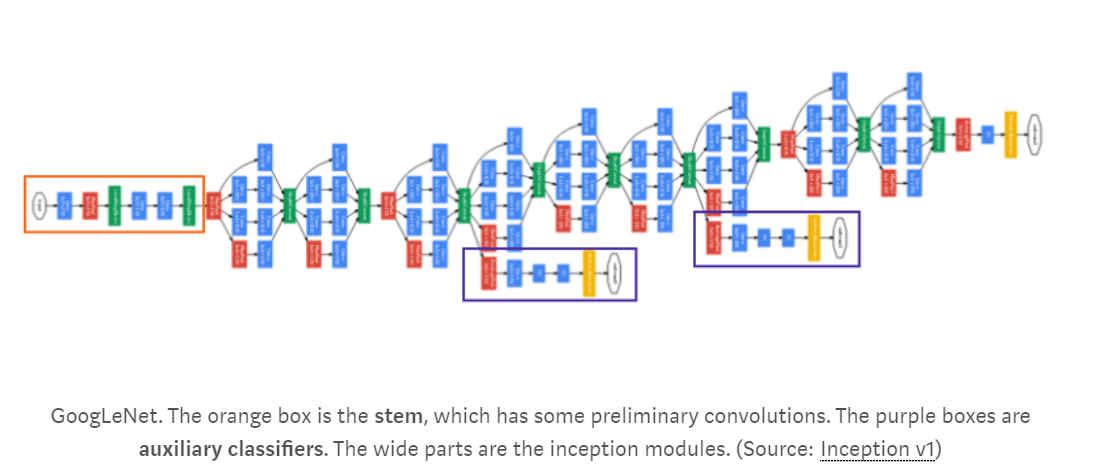

Ans-Introduce two auxiliary classifiers (The purple boxes in the image). They essentially applied softmax to the outputs of two of the inception modules, and computed an auxiliary loss over the same labels. The total loss function is a weighted sum of the auxiliary loss and the real loss.

The total loss used by the inception net during training.

total_loss = real_loss + 0.3 aux_loss_1 + 0.3 aux_loss_2

Points to note

- Used 9 Inception modules in the whole architecture, with over 100 layers in total! Now that is deep…

- No use of fully connected layers! They use an average pool instead, to go from a 7x7x1024 volume to a 1x1x1024 volume. This saves a huge number of parameters.

- Uses 12x fewer parameters than AlexNet.

- Trained on “a few high-end GPUs within a week”.

- It achieved a top-5 error rate of 6.67%

Inception V2

Rethinking the Inception Architecture for Computer Vision

Upgrades were targeted towards:

- Reducing representational bottleneck by replacing 5x5 convolution to two 3x3 convolution operations which further improves computational speed

The intuition was that, neural networks perform better when convolutions didn’t alter the dimensions of the input drastically. Reducing the dimensions too much may cause loss of information, known as a “representational bottleneck”

- Using smart factorization method where they factorize convolutions of filter size nxn to a combination of 1xn and nx1 convolutions.

For example, a 3x3 convolution is equivalent to first performing a 1x3 convolution, and then performing a 3x1 convolution on its output. They found this method to be 33% more cheaper than the single 3x3 convolution.

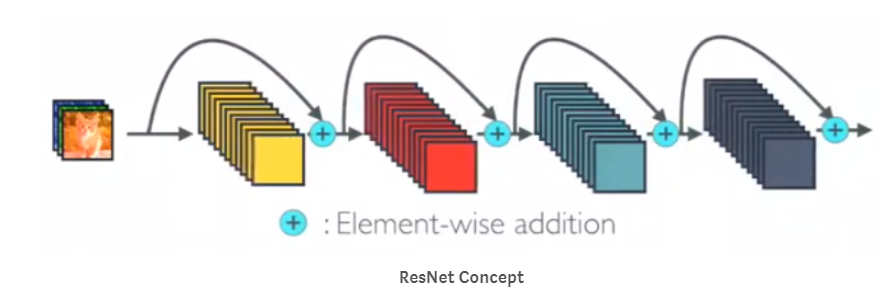

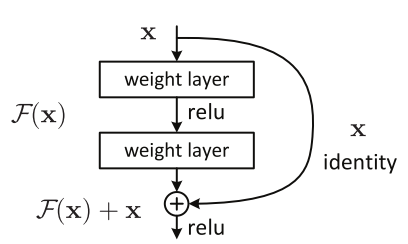

ResNet(2015)

Deep Residual Learning for Image Recognition

DenseNet(2017)

Densely Connected Convolutional Networks

It is a logical extension to ResNet.

From the paper: Recent work has shown that convolutional networks can be substantially deeper, more accurate, and efficient to train if they contain shorter connections between layers close to the input and those close to the output. In this paper, we embrace this observation and introduce the Dense Convolutional Network (DenseNet), which connects each layer to every other layer in a feed-forward fashion.

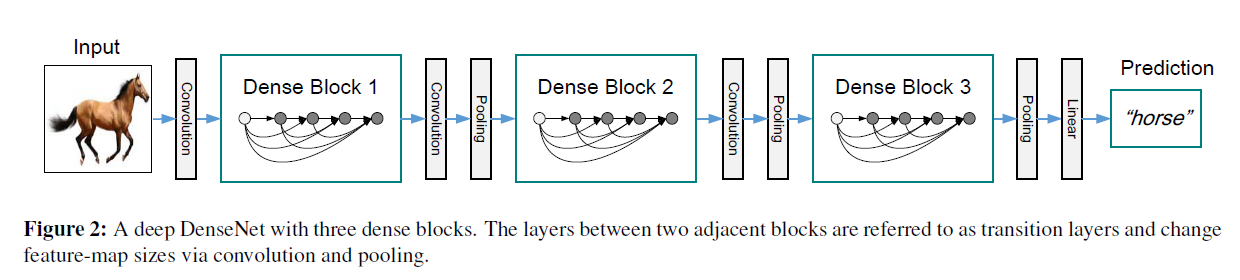

DenseNet Architecture

Let us explore different componenets of the network

1. Dense Block

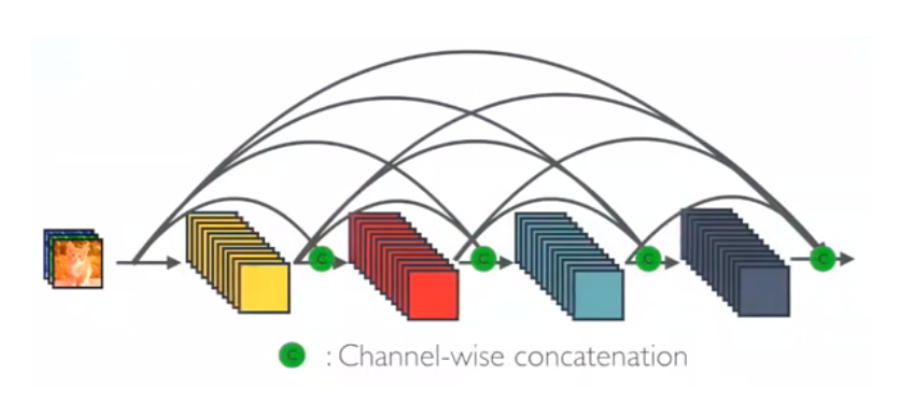

Feature map sizes are the same within the dense block so that they can be concatenated together easily.

In DenseNet, each layer obtains additional inputs from all preceding layers and passes on its own feature-maps to all subsequent layers. Concatenation is used. Each layer is receiving a “collective knowledge” from all preceding layers.

Since each layer receives feature maps from all preceding layers, network can be thinner and compact, i.e. number of channels can be fewer. The growth rate k is the additional number of channels for each layer.

The paper proposed different ways to implement DenseNet with/without B/C by adding some variations in the Dense block to further reduce the complexity,size and to bring more compression in the architecture.

1. Dense Block (DenseNet)

- Batch Norm (BN)

- ReLU

- 3×3 Convolution

2. Dense Block(DenseNet B)

- Batch Norm (BN)

- ReLU

- 1×1 Convolution

- Batch Norm (BN)

- ReLU

- 3×3 Convolution

3. Dense Block(DenseNet C)

- If a dense block contains m feature-maps, The transition layer generate $\theta $ output feature maps, where $\theta \leq \theata \leq$ is referred to as the compression factor.

- $\theta$=0.5 was used in the experiemnt which reduced the number of feature maps by 50%.

4. Dense Block(DenseNet BC)

- Combination of Densenet B and Densenet C

2. Trasition Layer

The layers between two adjacent blocks are referred to as transition layers where the following operations are done to change feature-map sizes:

- 1×1 Convolution

- 2×2 Average pooling

Points to Note:

- it requires fewer parameters than traditional convolutional networks

- Traditional convolutional networks with L layers have L connections — one between each layer and its subsequent layer — our network has L(L+1)/ 2 direct connections.

- Improved flow of information and gradients throughout the network, which makes them easy to train

- They alleviate the vanishing-gradient problem, strengthen feature propagation, encourage feature reuse, and substantially reduce the number of parameters.

- Concatenating feature maps instead of summing learned by different layers increases variation in the input of subsequent layers and improves efficiency. This constitutes a major difference between DenseNets and ResNets.

- It achieved a top-5 error rate of 6.66%

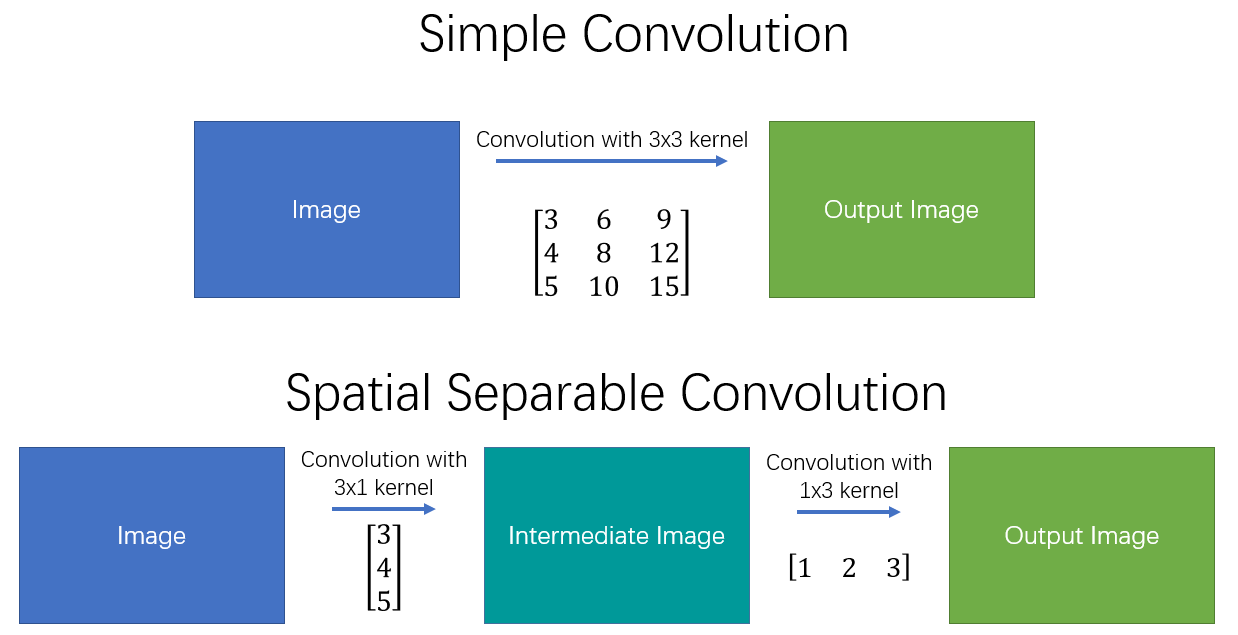

Spatial Seperable Convolution

Divides a kernel into two, smaller kernels

Instead of doing one convolution with 9 multiplications(parameters), we do two convolutions with 3 multiplications(parameters) each (6 in total) to achieve the same effect

With less multiplications, computational complexity goes down, and the network is able to run faster.

This was used in an architecture called Effnet showing promising results.

The main issue with the spatial separable convolution is that not all kernels can be “separated” into two, smaller kernels. This becomes particularly bothersome during training, since of all the possible kernels the network could have adopted, it can only end up using one of the tiny portion that can be separated into two smaller kernels.

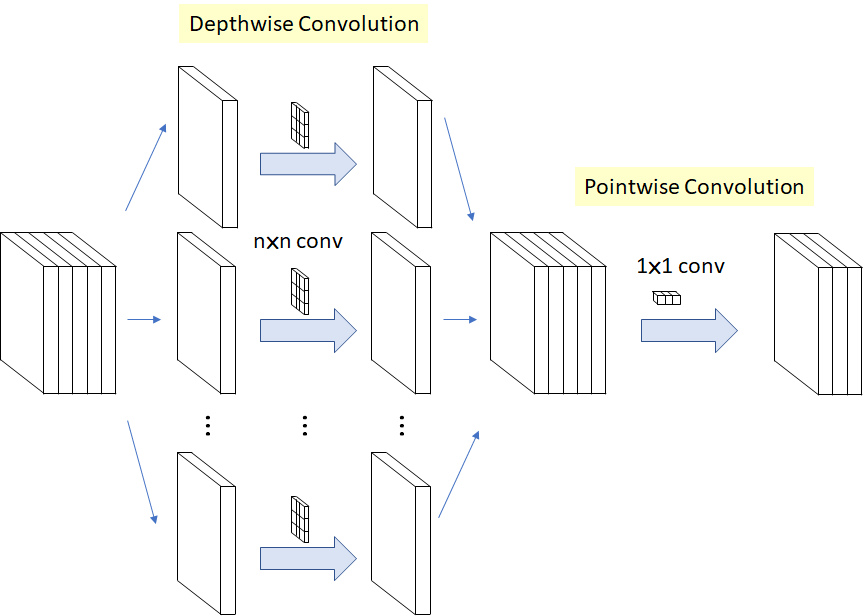

Depthwise Convolution

Say we need to increase the number of channels from 16 to 32 using 3x3 kernel.

Normal Convolution

Total No of Parameters = 3 x 3 x 16 x 32 = 4608

Depthwise Convolution

- DepthWise Convolution = 16 x [3 x 3 x 1]

- PointWise Convolution = 32 x [1 x 1 x 16]

Total Number of Parameters = 656

Mobile net uses depthwise seperable convolution to reduce the number of parameters

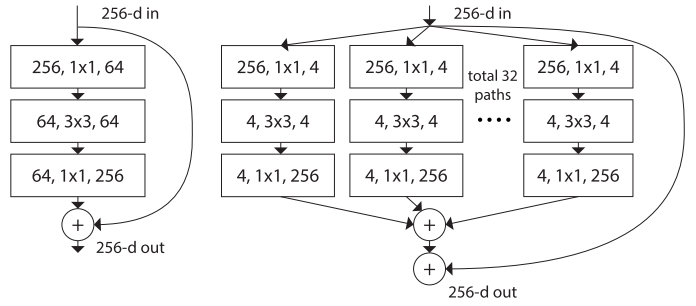

left: a building block of [2], right: a building block of ResNeXt with cardinality = 32

left: a building block of [2], right: a building block of ResNeXt with cardinality = 32